This was Jesse's Master's thesis project, which we got to collaborate on. By this point, our lab had spent years researching Organic User Interfaces and flexible-display interaction techniques based on bending and twisting the display, but Jesse's idea of combining touch input with bend input had not been evaluated before. We built a lot of custom electronic hardware, wrote the software for flexible E Ink displays driven by AM300 kits, and then tested the performance of bend + touch input on a flexible mobile device.

A lot of hardware work was needed before we had a prototype that could measure performance in a task involving both bend input and multitouch gestures.

Here were the basic requirements:

- Multitouch input: for pinch-to-zoom interactions

- Bend input: because we wanted to map the degree of bend to the rate of flipping through content
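The bend-to-rate mapping can be sketched roughly as follows. This is a minimal illustration in Python, not the code we ran (the actual software was written in C#). The function name `flip_rate`, the normalized bend range of -1 to 1, the dead zone, and the maximum rate are all assumptions made for the example.

```python
def flip_rate(bend, dead_zone=0.1, max_rate=20.0):
    """Map a normalized bend reading to a page-flip rate in pages per second.

    bend      -- hypothetical normalized sensor value in [-1.0, 1.0];
                 the sign encodes bend direction (forward or backward).
    dead_zone -- assumed magnitude below which bends are ignored, so that
                 simply holding the device does not flip pages.
    max_rate  -- assumed rate at full bend.
    """
    # Clamp out-of-range readings instead of extrapolating.
    bend = max(-1.0, min(1.0, bend))
    magnitude = abs(bend)
    if magnitude <= dead_zone:
        return 0.0
    # Rescale so the rate ramps linearly from 0 at the dead-zone edge
    # to max_rate at full bend.
    scaled = (magnitude - dead_zone) / (1.0 - dead_zone)
    rate = scaled * max_rate
    return rate if bend > 0 else -rate
```

A linear ramp is the simplest choice here; a nonlinear curve could give finer control at small bends, but that is a design decision this sketch does not try to settle.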

There were no flexible multitouch sensors available that we could just slap on, so we came up with a solution that I personally think was quite clever.

You see, we found a flexible Wacom sensor that could be used to detect a single "touch". Then we made a flexible circuit (designed, etched and soldered by us on Pyralux) with a grid of Hall effect sensors. We sandwiched the flexible circuit with the flexible Wacom sensor. With the tip of a Wacom stylus attached to the user's index finger and a magnet attached to the thumb, we had our own multitouch sensor that could bend!
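To make the idea concrete, here is a rough Python sketch of how two such readings could be combined into a pinch measurement. It is an illustration under stated assumptions, not our actual C# implementation: the weighted-centroid estimate, the 10 mm grid pitch, the normalized field readings, the noise threshold, and the names `thumb_position` and `pinch_distance` are all hypothetical.

```python
import math

def thumb_position(readings, pitch_mm=10.0, threshold=0.2):
    """Estimate the magnet (thumb) position from a grid of Hall effect readings.

    readings  -- list of rows; each value is an assumed field strength
                 normalized to [0.0, 1.0].
    pitch_mm  -- assumed spacing between neighbouring sensors.
    threshold -- assumed noise floor; weaker readings are ignored.
    Returns (x_mm, y_mm), or None when no sensor sees the magnet.
    """
    total = x_sum = y_sum = 0.0
    for row_idx, row in enumerate(readings):
        for col_idx, value in enumerate(row):
            if value < threshold:
                continue
            # Weight each sensor's location by how strongly it senses the magnet.
            total += value
            x_sum += value * col_idx * pitch_mm
            y_sum += value * row_idx * pitch_mm
    if total == 0.0:
        return None
    return (x_sum / total, y_sum / total)

def pinch_distance(index_xy, thumb_xy):
    """Distance between the stylus tip (index finger) and the magnet (thumb)."""
    return math.hypot(index_xy[0] - thumb_xy[0], index_xy[1] - thumb_xy[1])
```

For example, if the stylus reports the index finger at (15, 50) mm and the grid places the thumb at (15, 10) mm, the pinch distance is 40 mm; tracking how that distance changes over time is what drives a pinch-to-zoom gesture.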

We used FlexPoint bend sensors hooked up to an Arduino to detect bend input.

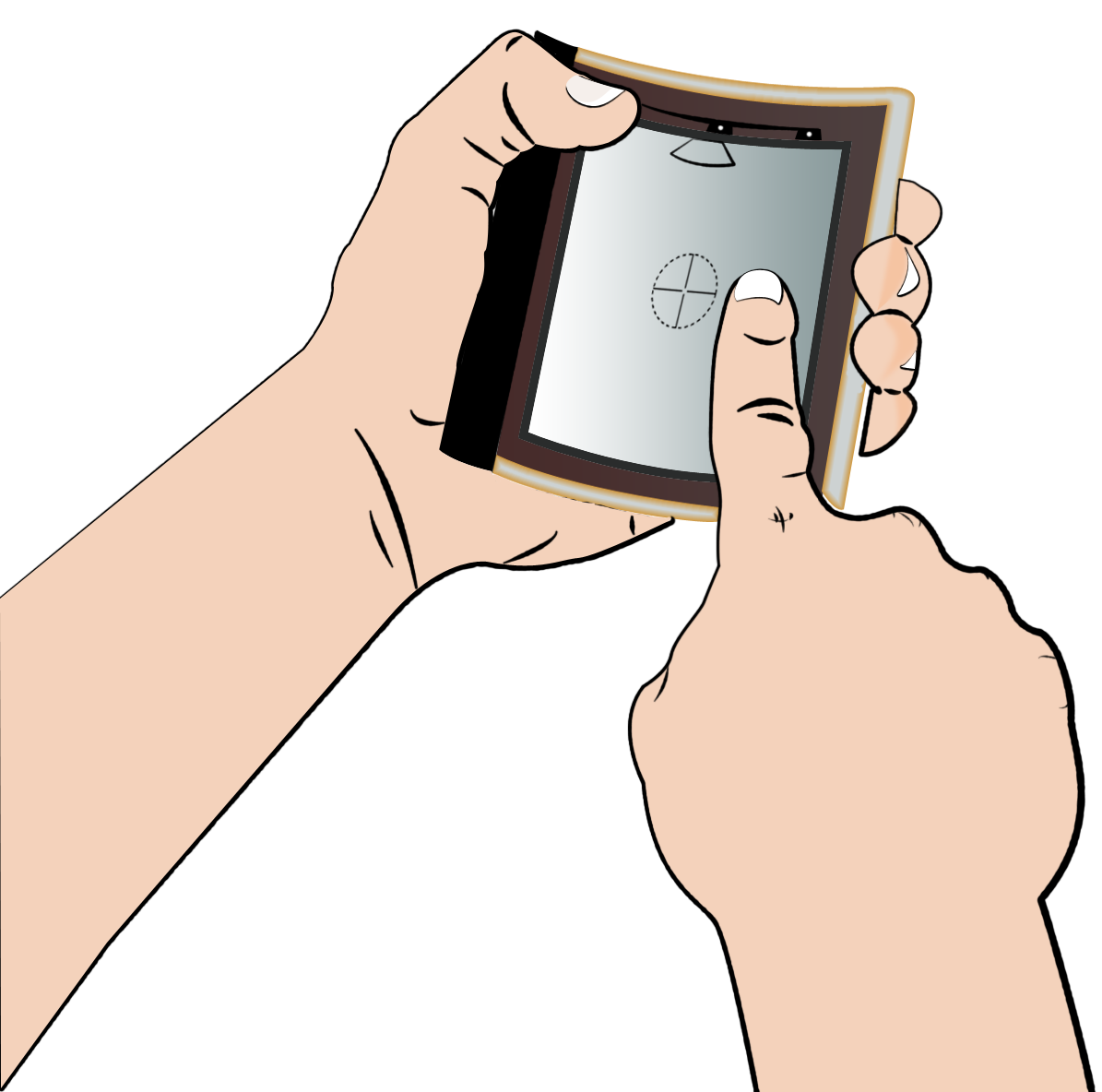

This is what the assembly looked like in use:

Finally, here's a video of the system in action:

Software: C# with WPF

Hardware: Vicon motion capture cameras, plus custom markers that we designed and built to track hand and head position

Excerpt from the paper published at TEI'13:

We present FlexView, a set of interaction techniques for Z-axis navigation on touch-enabled flexible mobile devices. FlexView augments touch input with bend to navigate through depth-arranged content. To investigate Z-axis navigation with FlexView, we measured document paging efficiency using touch, against two forms of bend input: bending the side of the display (leafing) and squeezing the display (squeezing). In addition to moving through the Z-axis, the second experiment added X-Y navigation in a pan-and-zoom task. Pinch gestures were compared to squeezing and leafing for zoom operations, while panning was consistently performed using touch.

Our experiments demonstrate that bend interaction is comparable to touch input for navigation through stacked content. Squeezing to zoom recorded the fastest times in the pan-and-zoom task. Overall, FlexView allows users to easily browse depth arranged information spaces without sacrificing traditional touch interactions.

Related Publication(s)