About eight months into my Master's program, my advisor (Roel) suggested a project that ended up becoming a precursor to my thesis. The idea was to use the lab's Vicon Motion Capture system to build a video blending application. By this time, I was already looking at prior research in interaction design for large displays, and this seemed like a promising (and interesting) project to work on.

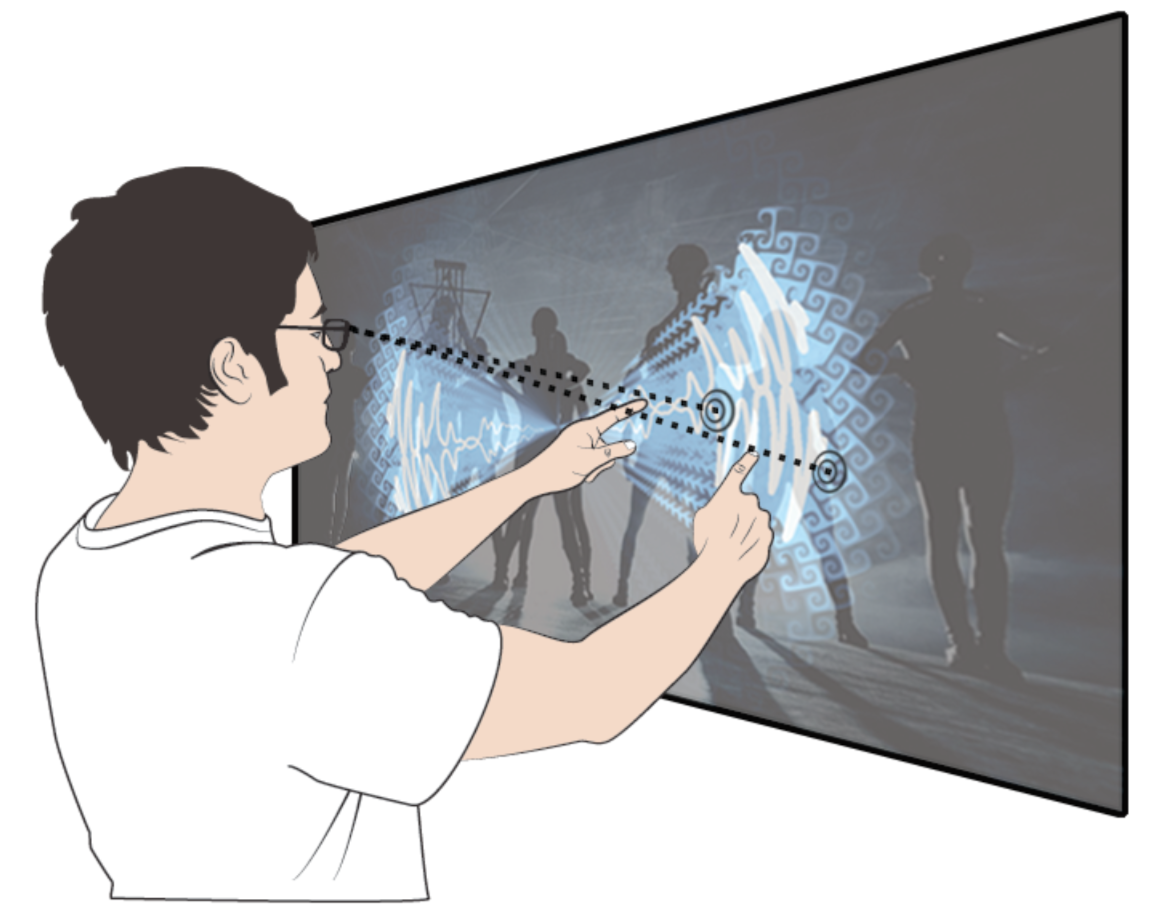

Using perspective-based pointing (i.e., head-tracking combined with tracking the position of the index finger on each hand), we determined a "cursor's" position on the display. With a couple of cursors, one for each hand, we could perform bimanual in-air gestures to move, resize, and rotate videos, change their opacity, make them go faster or slower, etc. Overall, there was a performative/interactive aspect to this project that was quite promising.
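To make the pointing idea concrete, here is a minimal sketch in Python (not the original C#/WPF code). It assumes one common way of doing perspective-based pointing: cast a ray from the tracked head position through the tracked fingertip, and place the cursor where that ray hits the display plane. The function name, the coordinate frame, and the choice of a flat display described by a point and a normal are all illustrative assumptions, not details taken from the project.

```python
def cursor_on_display(head, fingertip, plane_point, plane_normal):
    """Return the 3D point where the head->fingertip ray meets the display plane.

    All arguments are (x, y, z) tuples in the same tracking coordinate frame
    (assumed here; the real system's frame is not described in this post).
    Returns None if the ray is parallel to the display or points away from it.
    """
    # Direction of the pointing ray: from the head through the fingertip.
    direction = tuple(f - h for f, h in zip(fingertip, head))

    # Dot product of the ray direction with the plane normal.
    denom = sum(d * n for d, n in zip(direction, plane_normal))
    if abs(denom) < 1e-9:
        return None  # ray is (nearly) parallel to the display

    # Solve (head + t * direction - plane_point) . normal = 0 for t.
    t = sum((p - h) * n for p, h, n in zip(plane_point, head, plane_normal)) / denom
    if t <= 0:
        return None  # display is behind the user

    return tuple(h + t * d for h, d in zip(head, direction))


# Hypothetical example: display is the plane z = 0, user stands 2 m in front of it.
left_cursor = cursor_on_display(
    head=(0.0, 1.5, 2.0),
    fingertip=(0.25, 1.5, 1.5),
    plane_point=(0.0, 0.0, 0.0),
    plane_normal=(0.0, 0.0, 1.0),
)
```

Running the same function once per hand would give the two cursors used for bimanual gestures; mapping the 3D hit point to pixel coordinates would depend on the display's size and placement.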

On a tangential note: up to that point, I was still struggling at the lab, and fairly short of confidence. Luckily for me, Roel paired me up with another student/colleague (Jesse Burstyn), and that was an immense help. Not only was Jesse very very competent, but he was also a genuinely good human being; he became a close friend of mine. To me, this project was the marker/start of a period of successful (though stressful) research. Although I did not know it at the time, in hindsight, Waveform was one of my most important research projects.

Here's a video of this in action (pardon the poor quality):

Software: C# with WPF

Hardware: Vicon Motion Capture cameras, plus custom-designed and custom-built markers used to track hand and head position

Excerpt from the paper published at CHI'10:

One of the most important characteristics of VJing is the capacity to have live control over media. VJs create a real-time mix using video content that is pulled from a media library that resides on a laptop hard drive, as well as computer visualizations that are generated on-the-fly. VJs typically rely on a workspace with multiple laptops and/or video mixers set on a table. However, with this setup, there is a disconnect between the instrument, the VJ performance and the visual output. For example, VJs cannot typically see the effect of specific videos on the large screen. In addition, the audience experience of the VJ’s creative expression is limited to that of watching her press buttons on her laptop.

To address these issues, we investigated ways in which videos can be directly manipulated on the large screen. To avoid VJs from blocking the screen, we looked at solutions that allowed for remote gestural control of the visual content. In this paper, we discuss WaveForm, a computer vision system that uses in-air multi-touch gestures to enable video manipulation from a distance. Our system supports video translation, resize, scale, blend, as well as layer operations through specific in-air multi-touch gestures. To allow the large display to only be used for rendering the result, the WaveForm VJ uses a tablet computer as a private media palette. VJs select and cue videos and music tracks on this palette through multitouch gestures, which are then transferred to and manipulated on the audience display via in-air gestures.

Related Publication(s)